AI is not neutral: it is just as biased as humans

The discourse around artificial intelligence is often polarized - either exaggerating its benefits and capabilities, or fearmongering about our impending doom at the hands of Skynet. We shouldn't let the real issues surrounding AI get lost in this sea of sensationalism, however. One problem we are already seeing is that AI is far from neutral. It can and does discriminate, because it often inherits human biases.

The appeal of artificial intelligence is understandable. It can analyze vast amounts of data seamlessly and make predictions based on nothing but cold, hard facts. Or so we'd like to think. The truth is, however, that most training data that machine learning algorithms use today is constructed by humans or at least with human involvement. And since most current algorithms are not open source, we cannot know how comprehensive the information received by an AI is and whether all variables have been covered.

Existing and future problems

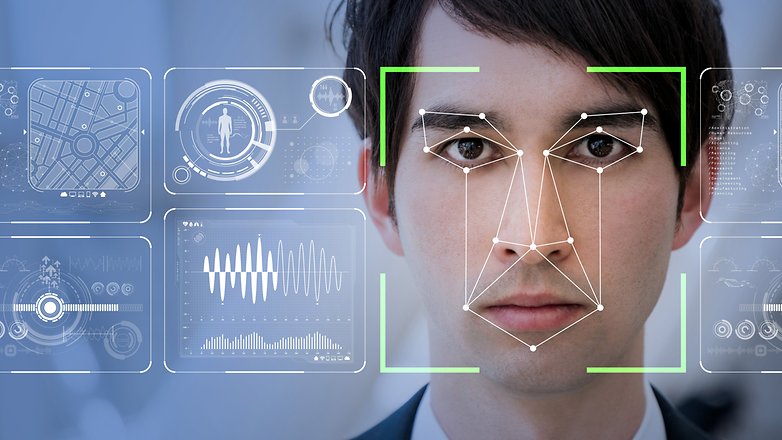

Why is this an issue? Because artificial intelligence is not just curating your Netflix recommendations or social media feeds; it is increasingly making decisions that can have a significant impact on your life. Machine learning algorithms are used to scan resumes and select suitable job candidates and to predict recidivism rates, and some even attempt to spot future terrorists based on their facial features.

There have already been cases that showcase how AI can inherit human bias and further marginalize minorities or vulnerable groups. One of the best-known examples is an experimental Amazon hiring tool that discriminated against women. The algorithm rated candidates from one to five stars and was supposed to select those most suitable for the job. However, it began discriminating against women, because the data it was trained on mainly contained CVs from male candidates. It therefore concluded that women were not desirable and penalized applications with the word 'women' in them. Although the program was edited to make it neutral, the project was scrapped and never saw actual implementation.

A study by ProPublica, meanwhile, discovered that COMPAS - an algorithm meant to predict recidivism - was racially biased. Their analysis "found that black defendants were far more likely than white defendants to be incorrectly judged to be at a higher risk of recidivism, while white defendants were more likely than black defendants to be incorrectly flagged as low risk."
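The disparity described in that quote is a gap in error rates between groups: one group is wrongly flagged as high risk more often, the other is wrongly flagged as low risk more often. The sketch below shows one way such a gap could be measured. It is only an illustration: the function name, the group labels "A" and "B" and every number in it are made up for this example and are not ProPublica's data or method.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """Compute false positive and false negative rates per group.

    records: iterable of (group, flagged_high_risk, reoffended) tuples.
    """
    counts = defaultdict(lambda: {"fp": 0, "tn": 0, "fn": 0, "tp": 0})
    for group, flagged_high_risk, reoffended in records:
        if reoffended:
            key = "tp" if flagged_high_risk else "fn"
        else:
            key = "fp" if flagged_high_risk else "tn"
        counts[group][key] += 1

    rates = {}
    for group, c in counts.items():
        did_not_reoffend = c["fp"] + c["tn"]
        did_reoffend = c["fn"] + c["tp"]
        rates[group] = {
            # Share of people who did NOT reoffend but were flagged high risk.
            "false_positive_rate": c["fp"] / did_not_reoffend if did_not_reoffend else None,
            # Share of people who DID reoffend but were flagged low risk.
            "false_negative_rate": c["fn"] / did_reoffend if did_reoffend else None,
        }
    return rates


def make_records(group, fp, tn, fn, tp):
    """Build toy records from the four outcome counts (hypothetical data)."""
    return (
        [(group, True, False)] * fp
        + [(group, False, False)] * tn
        + [(group, False, True)] * fn
        + [(group, True, True)] * tp
    )


# Entirely invented numbers, chosen only to make the gap visible.
toy_records = make_records("A", fp=4, tn=6, fn=3, tp=7) + make_records("B", fp=2, tn=8, fn=5, tp=5)
rates = error_rates_by_group(toy_records)

for group, group_rates in sorted(rates.items()):
    print(group, group_rates)
```

In this toy data both groups are misclassified seven times out of twenty, yet group A is wrongly flagged as high risk twice as often as group B. An overall accuracy figure would hide exactly the kind of imbalance the quote describes.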

Cases like these show that explainability and transparency are immensely important in the field of AI. When a machine is making decisions about a person's future, we have to know what those decisions are based on. In the case of COMPAS, it was using incarceration data. However, relying on historical data has its problems - the machines will encounter historical patterns colored by gender, racial and other biases. For example, the fact that there have historically been fewer male candidates for nursing positions does not mean that men are less qualified or deserving.

There also are cases in which artificial intelligence can be right, but for the wrong reasons. According to the Washington Post, "A University of Washington professor shared the example of a colleague who trained a computer system to tell the difference between dogs and wolves. Tests proved the system was almost 100 percent accurate. But it turned out the computer was successful because it learned to look for snow in the background of the photos. All of the wolf photos were taken in the snow, whereas the dog pictures weren’t."

Finally, we have to discuss cases in which AI is used in combination with dubious science and data. An Israeli startup called Faception claims to be able to identify potential terrorists by their facial traits with the help of artificial intelligence. More worryingly, according to the Washington Post, they have already signed a contract with a homeland security agency.

The company's CEO, Shai Gilboa, claims that “our personality is determined by our DNA and reflected in our face. It’s a kind of signal.” Faception uses 15 classifiers which can allegedly identify certain traits with 80% accuracy. However, these numbers are not reassuring if you happen to fall into the 20% who could be misidentified as a terrorist or pedophile. Not only that - the science behind it all is highly questionable. Alexander Todorov, a Princeton psychology professor, told the Post that “the evidence that there is accuracy in these judgments is extremely weak.” The company also refuses to make its classifiers public. It's not hard to see how this can have disastrous consequences.
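To see why an 80% figure offers so little comfort, it helps to run the numbers on a large population in which the trait being searched for is rare. The sketch below is a back-of-the-envelope calculation under loudly stated assumptions: it treats "80% accuracy" as applying equally to people who have the trait and people who do not, and the population size and the number of actual targets are hypothetical values picked for illustration. None of these figures come from Faception.

```python
def screening_outcome(population, actual_targets, sensitivity, specificity):
    """Estimate how many people a classifier flags correctly and incorrectly.

    sensitivity: share of actual targets that get flagged.
    specificity: share of non-targets that are correctly left alone.
    """
    non_targets = population - actual_targets
    true_positives = actual_targets * sensitivity
    false_positives = non_targets * (1 - specificity)
    flagged = true_positives + false_positives
    return {
        "true_positives": true_positives,
        "false_positives": false_positives,
        # Chance that a flagged person is an actual target.
        "precision": true_positives / flagged if flagged else 0.0,
    }


# Hypothetical scenario: 1,000,000 people screened, 100 actual targets,
# and the claimed 80% assumed to hold for both groups.
outcome = screening_outcome(
    population=1_000_000,
    actual_targets=100,
    sensitivity=0.8,
    specificity=0.8,
)

for name, value in outcome.items():
    print(f"{name}: {value:.4f}")
```

Under these assumptions roughly 200,000 innocent people are flagged alongside about 80 actual targets, so well under one in a thousand flagged people is a real match. The rarer the trait, the worse this ratio becomes.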

Potential solutions

One company that is openly addressing the issue of AI bias is IBM. It has developed a new methodology designed to reduce existing discrimination in a data set. The goal is for a person to have a similar chance of 'receiving a favorable decision', for a loan for example, irrespective of their membership of a 'protected' or 'unprotected group'.
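The goal stated above can at least be measured, even before anything is done to fix it. The sketch below compares the rate of favorable decisions between two groups. It is not IBM's methodology, only a simple check of the stated goal; the function names and the loan decisions are hypothetical.

```python
def favorable_rate(decisions):
    """Share of decisions that were favorable (True)."""
    return sum(decisions) / len(decisions)


def parity_gap(protected_decisions, unprotected_decisions):
    """Compare favorable-decision rates between two groups."""
    protected_rate = favorable_rate(protected_decisions)
    unprotected_rate = favorable_rate(unprotected_decisions)
    return {
        "protected_rate": protected_rate,
        "unprotected_rate": unprotected_rate,
        # 0.0 would mean both groups are approved at the same rate.
        "difference": unprotected_rate - protected_rate,
        # 1.0 would mean parity; lower values mean the protected group is approved less often.
        "ratio": protected_rate / unprotected_rate if unprotected_rate else None,
    }


# Invented loan decisions: True means the loan was approved.
protected = [True] * 3 + [False] * 7
unprotected = [True] * 6 + [False] * 4

gap = parity_gap(protected, unprotected)
for name, value in gap.items():
    print(f"{name}: {value:.2f}")
```

In this made-up example the protected group is approved half as often as the unprotected group. A bias-reduction method of the kind described would aim to push the difference toward zero and the ratio toward one.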

However, IBM researcher Francesca Rossi has also placed emphasis on changes that the industry itself needs to make. According to her, the development of artificial intelligence needs to happen in multidisciplinary environments and with a multicultural approach. She also believes that the more we learn about AI bias, the more we learn about our own.

Rossi is also quite optimistic - she claims that AI bias can be eliminated within the next five years. It's not easy to agree with her, however. A significant cultural shift must occur in the tech industry first. Nevertheless, the multidisciplinary approach she proposes could push this change. It's also sorely needed - many developers and researchers are not formally trained in ethics or related fields.

Another step towards solving the problem of AI bias could be its 'democratization' - making algorithms available to the public, so they can study, understand and use them better. Companies like OpenAI are already on this path. However, those protective of their intellectual property are not likely to follow.

As you can see, there is no easy answer. One thing is certain, however - we shouldn't blindly trust technology and expect it to solve all of our problems. Like anything else, it was not created in a vacuum. It is a product of society and therefore inherits all of our human biases and preconceptions.

What are your thoughts on the subject? Let us know in the comments.

I am not certain if humans would be able to fully control AI at some point in the future. It is superior to us in so many ways. The great physicist Stephen Hawking did warn us all about it. I hope we’ll take his advice.

I saved this article for future reference. Let's see how things turn out for AI over the coming 10 years. I am very interested to see what kind of relationship people and AI machines will develop with each other.